CT2 Contingency Plan

From UG

(→Steps) |

|||

| (19 intermediate revisions not shown) | |||

| Line 19: | Line 19: | ||

* DNS TTL of ct.jaguarfreight.com is by default 24 hours. Should be set to lower value, say 300 seconds. To be done in https://my.rackspace.com, once a week. | * DNS TTL of ct.jaguarfreight.com is by default 24 hours. Should be set to lower value, say 300 seconds. To be done in https://my.rackspace.com, once a week. | ||

* Currently, inbound TMS FTP accounts are the same for dev and production. If dev is to be recovery target, they must not conflict. Accounts should either be made different, or an additional virtual ftp server should be setup on dev. The latter may conflict with ongoing network reshuffle. | * Currently, inbound TMS FTP accounts are the same for dev and production. If dev is to be recovery target, they must not conflict. Accounts should either be made different, or an additional virtual ftp server should be setup on dev. The latter may conflict with ongoing network reshuffle. | ||

| + | |||

| + | ''[Perry] I'm not clear on the above statement regarding TMS FTP accounts. Does TMS use FQDN to connect to us or do they connect via IP address?'' | ||

| + | |||

| + | ''[Vlad] After ct2 is redirected to dev2, both ct2 and dev2 point to the same IP address. Since the same username is used, in both production and dev, files dev and ct files will get mixed. Although dev2 could be redirected to some other address for duration of outage, it's not guaranteed to take effect immediately. So to avoid this kind collision it's better to change account names used in dev (the names are descartes and airdescartes). | ||

| + | |||

* Edit SPF DNS record to authenticate mail sent by dev. | * Edit SPF DNS record to authenticate mail sent by dev. | ||

| + | |||

| + | ''[Perry] Can this be done in advance, without impacting our current production system's ability to send email?'' | ||

| + | |||

| + | ''[Vlad] Already done | ||

| + | |||

| + | * Synchronize database/files backup of production to minimize inconsistency | ||

| + | |||

| + | ''[Perry] What do you mean syncrhonize database/files backups? I thought that we copied the data from production to the NY server every night?'' | ||

| + | |||

| + | ''[Vlad] Backups should be moved closer to each other and away from Rackspace backup. Files should be backed after database. Since backup is resource consuming operation, backup should be started when during period of historically low server utilization. I need to gather some statistics for that. | ||

===Steps=== | ===Steps=== | ||

| Line 30: | Line 45: | ||

* Redirect ct.jaguarfreight.com to 69.74.55.203. Go to https://my.rackspace.com. Account number 22130. Network->Domains->jaguarfreight.com | * Redirect ct.jaguarfreight.com to 69.74.55.203. Go to https://my.rackspace.com. Account number 22130. Network->Domains->jaguarfreight.com | ||

* TBD | * TBD | ||

| + | |||

| + | ''[Perry] I'm assuming the above steps are all to be performed on DEV2?'' | ||

| + | |||

| + | ''[Vlad] Yes, with exception of part to be done on rackspace | ||

[Vlad to complete] | [Vlad to complete] | ||

| Line 48: | Line 67: | ||

Recovery steps...... | Recovery steps...... | ||

| - | ==Normalization | + | ==Normalization== |

===Steps=== | ===Steps=== | ||

* Stop application | * Stop application | ||

| Line 62: | Line 81: | ||

* DNS switch ct.jaguarfreight.com from dev to production | * DNS switch ct.jaguarfreight.com from dev to production | ||

* Copy inbound TMS files to production | * Copy inbound TMS files to production | ||

| + | * Reenable backup of production. On dev2 execute: "rm /.no-ct2-backup" | ||

* TBD | * TBD | ||

| + | |||

| + | ''[Perry] I'm assuming the above Normalization steps are to be executed on DEV2. But what about the steps necessary on CT2 to bring Production back online?'' | ||

| + | |||

| + | ''[Vlad] CT2 will be brought back by Rackspace. They will restore whatever (latest) backup they have. Data from second site will overwrite data from the backup. | ||

| + | |||

[Vlad to complete] | [Vlad to complete] | ||

| Line 79: | Line 104: | ||

Normalization steps... | Normalization steps... | ||

| + | |||

| + | ==Proposed CT2 Disaster Recovery Environment== | ||

| + | |||

| + | ===Objectives=== | ||

| + | |||

| + | * Recovery Time Objective (RTO) - 2 hours to recover in Disaster Recovery environment. | ||

| + | |||

| + | * Recovery Point Objective (RPO) - < 1 hour of potential data loss. | ||

| + | |||

| + | * Eliminate all Single Points of Failure - incorporate redundancies in all nodes. | ||

| + | |||

| + | |||

| + | ====Proposed Architecture (HOT-WARM - Standby Server)==== | ||

| + | |||

| + | * Build warm standby Disaster Recovery environment in either Valley Stream, Rackspace, or another vendor. Standby server will remain IDLE during normal operations. Standby server will only become LIVE in the event of a failover. | ||

| + | |||

| + | * Configure Production MySQL server to asynchronously replicate changes into DR database server. | ||

| + | |||

| + | * Evaluate DNS options from either Rackspace or other vendors to failover traffic to Disaster Recovery site in case of a Production outage. | ||

| + | |||

| + | [[File:CT2 DR Warm Standby.jpg]] | ||

| + | |||

| + | ====Proposed Architecture (HOT-WARM - Secondary Server)==== | ||

| + | |||

| + | * Build secondary server for Disaster Recovery environment in either Rackspace (London) or another vendor in Europe. Failover should be seamless. | ||

| + | |||

| + | * Configure Production MySQL server to asynchronously replicate changes into secondary SLAVE database server. | ||

| + | |||

| + | * Evaluate Geographical-based DNS options from either Rackspace or other vendors to automatically failover traffic to secondary site in case of a Production outage in our Primary site. | ||

| + | |||

| + | * North America (NA) users will be routed to our Primary Site, while Europe/Asia-Pacific (EU/ASPAC) users will be routed to our Secondary Site hosted in Europe. NA users will perform READ/WRITE transactions against primary database. EU/ASPAC users will perform READ transactions against secondary site, and WRITE transactions against primary site. EU/ASPAC users could experience performance improvement in READ transactions, but latency issues in WRITE transactions. | ||

| + | |||

| + | * Latency concerns and Timing issues. Will also require some development work to take into account the network latency between an EU/ASPAC WRITE transaction and an EU/ASPAC READ transaction. | ||

| + | |||

| + | [[File:CT2 DR Secondary Server.jpg]] | ||

| + | |||

| + | ====Proposed Architecture (HOT-HOT - Clustered Servers)==== | ||

| + | |||

| + | * Build secondary server in either Rackspace (London) or another vendor in Europe. This is a high availability design and failover should be seamless. | ||

| + | |||

| + | * Configure both MySQL nodes to synchronously replicate changes to both sites. A MySQL server will be denoted as master and will manage the two-phase commit process between sites. | ||

| + | |||

| + | * Evaluate Geographical-based DNS options from either Rackspace or other vendors to automatically failover traffic to other site in case of any outage. | ||

| + | |||

| + | * North America (NA) users will be routed to our North America Site, while Europe/Asia-Pacific (EU/ASPAC) users will be routed to our European site. | ||

| + | |||

| + | * MySQL Clustering does not support foreign keys. This will require us to redesign the entire CyberTrax2 application. | ||

| + | |||

| + | * Latency concerns and Timing issues. There may be latency concerns surrounding the two-phase commit process as any WRITE transaction will need to commit to all sites before returning control back the Master. | ||

| + | |||

| + | [[File:CT2 DR Cluster.jpg]] | ||

| + | |||

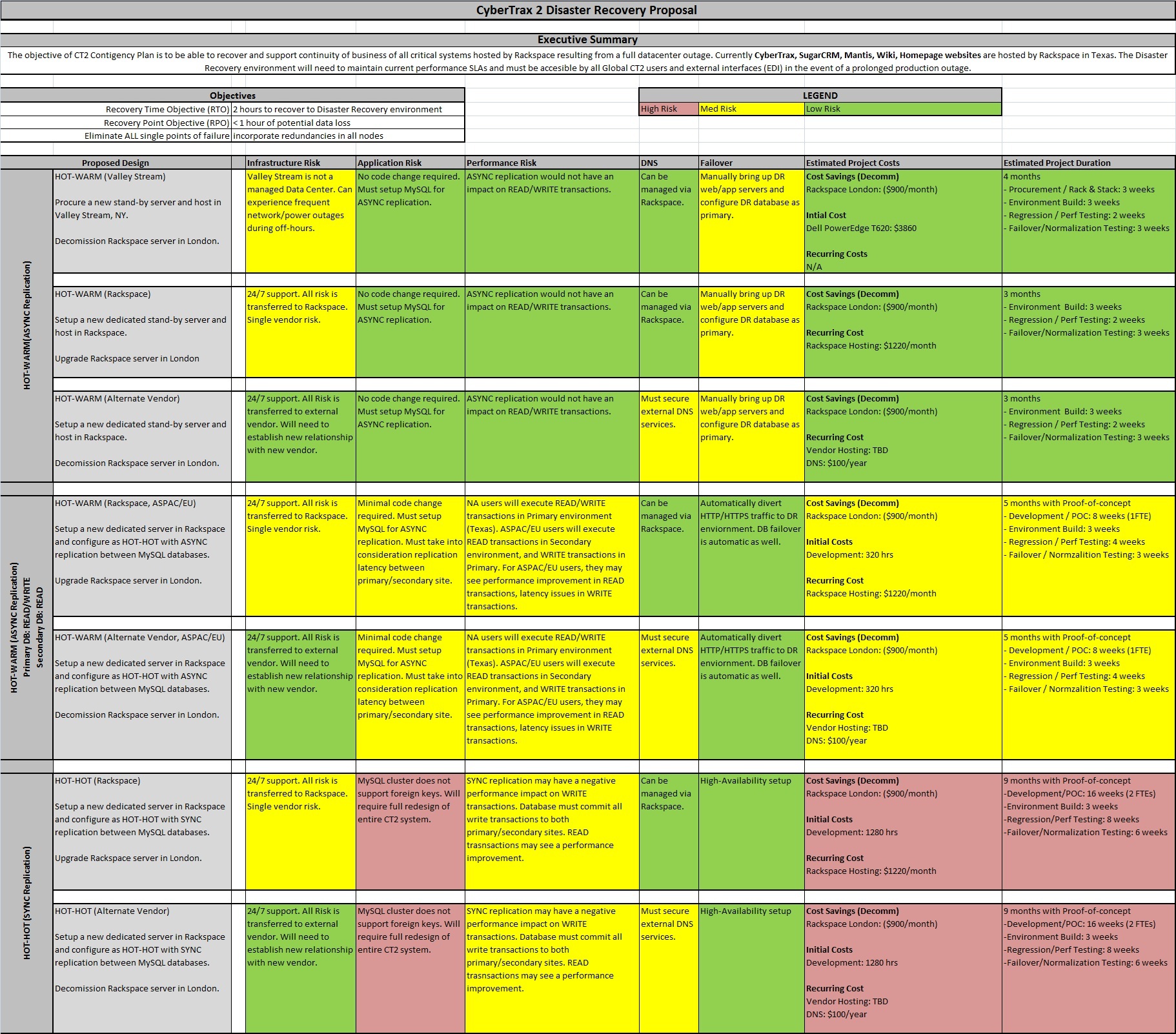

| + | ====Disaster Recovery Proposal and Evaluation Heat map==== | ||

| + | |||

| + | [[File:JFS CT2 DR Proposal v1.jpg]] | ||

Current revision as of 16:48, 16 March 2012

Summary

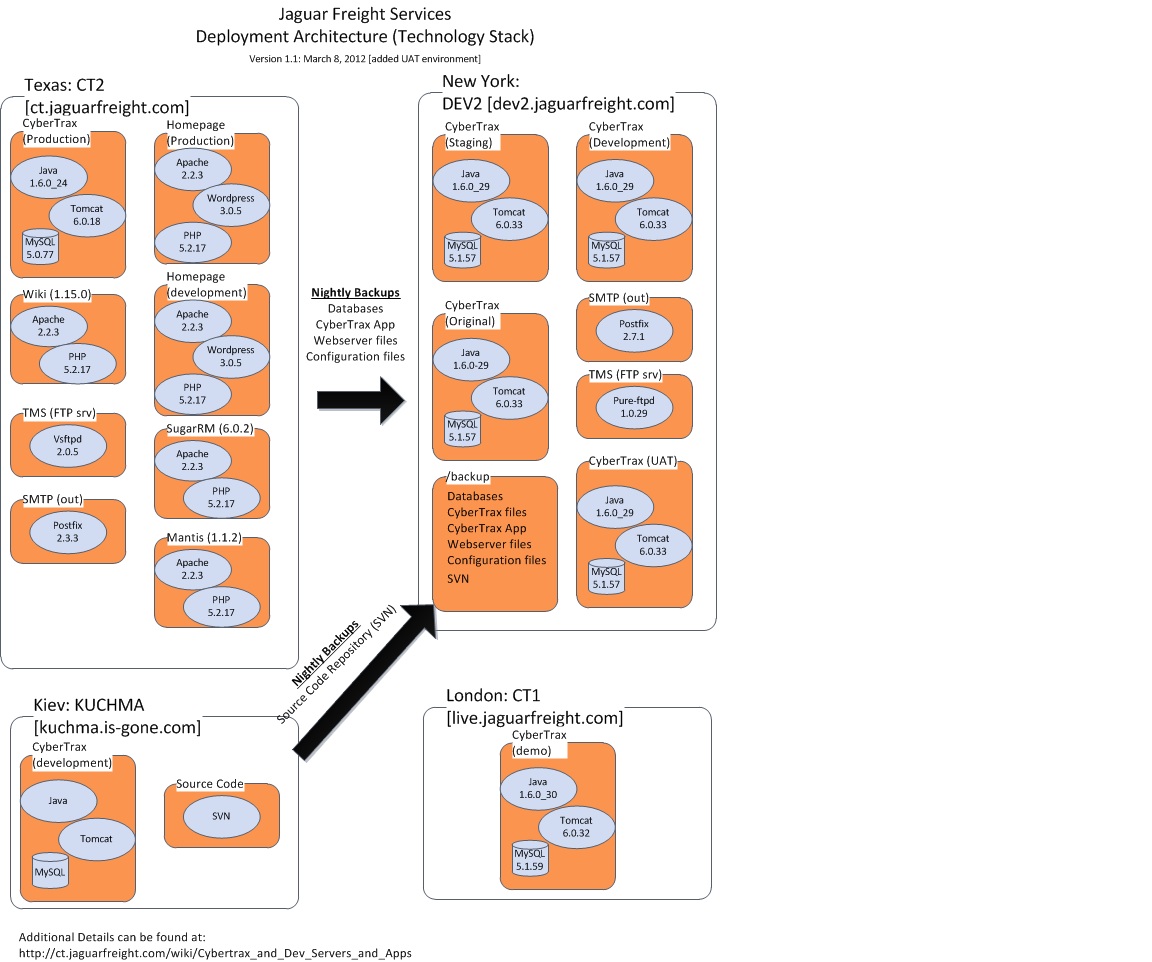

The objective of the CT2 Contingency Plan is to recover all critical systems hosted by Rackspace, and maintain business continuity, in the event of a full datacenter outage. Currently, the CyberTrax, SugarCRM, Mantis, Wiki, and Homepage websites are hosted by Rackspace in Texas. This wiki should document all steps necessary to fail over to a Disaster Recovery site (currently designated as the Valley Stream, NY office), including steps to restore databases and to bring up web servers, application servers, FTP servers, and SMTP functionality. Normalization steps (reverting the system from the Disaster Recovery site back to the Production site) will need to be documented as well.

External interfaces, including but not limited to EDI transfers to Trendset, EDI transfers to Descartes (TMS), and all other systems that interface with CyberTrax, are in scope for this contingency plan.

At this point, there is no specific Recovery Point Objective (RPO) or Recovery Time Objective (RTO) for this plan. These parameters will be determined at a later time.

Deployment Architecture/Technology Stack

Recovery

Prerequisites

- The DNS TTL of ct.jaguarfreight.com is 24 hours by default. It should be set to a lower value, e.g. 300 seconds. This is done in https://my.rackspace.com, once a week.

- Currently, the inbound TMS FTP accounts are the same on dev and production. If dev is to be the recovery target, the accounts must not conflict. Either the accounts should be made different, or an additional virtual FTP server should be set up on dev. The latter option may conflict with the ongoing network reshuffle.

[Perry] I'm not clear on the above statement regarding TMS FTP accounts. Does TMS use FQDN to connect to us or do they connect via IP address?

[Vlad] After ct2 is redirected to dev2, both ct2 and dev2 point to the same IP address. Since the same usernames are used in both production and dev, dev and ct files will get mixed. Although dev2 could be redirected to some other address for the duration of the outage, that change is not guaranteed to take effect immediately. So, to avoid this kind of collision, it is better to change the account names used in dev (the names are descartes and airdescartes).
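Regarding the DNS TTL prerequisite: lowering the TTL only helps once resolvers have dropped answers they cached under the old 24-hour TTL, so the lower value has to be in place at least 24 hours before a failover to take full effect. The minimal Python sketch below works out the latest time clients may still be sent to the old address. It assumes every resolver honors the TTL it received (not all do), and the dates are illustrative only.

```python
from datetime import datetime, timedelta


def stale_until(ttl_lowered_at: datetime, old_ttl_s: int,
                redirect_at: datetime, new_ttl_s: int) -> datetime:
    """Latest time a TTL-honoring resolver may still return the old A record."""
    # A resolver that cached the record just before the TTL was lowered
    # keeps that answer for the full old TTL.
    old_entries_expire = ttl_lowered_at + timedelta(seconds=old_ttl_s)
    # Answers cached after the lowering carry the new, shorter TTL, so they
    # expire at most new_ttl_s after the redirect.
    new_entries_expire = redirect_at + timedelta(seconds=new_ttl_s)
    return max(old_entries_expire, new_entries_expire)


lowered = datetime(2012, 3, 1, 0, 0)
# TTL lowered days in advance: clients follow the redirect within 5 minutes.
print(stale_until(lowered, 86400, datetime(2012, 3, 10, 12, 0), 300))  # 2012-03-10 12:05:00
# TTL lowered only 6 hours before the redirect: stale answers for 18 more hours.
print(stale_until(lowered, 86400, datetime(2012, 3, 1, 6, 0), 300))    # 2012-03-02 00:00:00
```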

- Edit the SPF DNS record so that mail sent by dev is authenticated.

[Perry] Can this be done in advance, without impacting our current production system's ability to send email?

[Vlad] Already done
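Once the record is edited, a quick sanity check is whether the dev2 sending address is covered by an `ip4:` mechanism. The Python sketch below evaluates only `ip4:` mechanisms, using SPF's first-match rule; full SPF evaluation (RFC 7208) also handles `a`, `mx`, `include`, `redirect` and more. The record text is a hypothetical example rather than the real jaguarfreight.com record, 192.0.2.10 is a documentation placeholder for production, and treating 69.74.55.203 (the redirect target in the recovery steps) as dev's outbound mail address is an assumption.

```python
import ipaddress


def ip4_authorized(spf_record: str, sender_ip: str) -> bool:
    """Simplified SPF check that looks at ip4: mechanisms only.

    The qualifier of the first matching ip4: mechanism decides the result;
    a, mx, include, redirect and all other terms are ignored.
    """
    terms = spf_record.split()
    if not terms or terms[0].lower() != "v=spf1":
        raise ValueError("not an SPF record")
    addr = ipaddress.IPv4Address(sender_ip)
    for term in terms[1:]:
        qualifier = "+"  # SPF default when no qualifier is written
        if term[0] in "+-~?":
            qualifier, term = term[0], term[1:]
        if term.lower().startswith("ip4:"):
            # strict=False accepts entries such as ip4:192.0.2.10/24.
            network = ipaddress.IPv4Network(term[4:], strict=False)
            if addr in network:
                return qualifier == "+"
    return False


# Hypothetical record: 192.0.2.10 stands in for the production mail server.
record = "v=spf1 ip4:192.0.2.10 ip4:69.74.55.203 ~all"
print(ip4_authorized(record, "69.74.55.203"))  # True
print(ip4_authorized(record, "198.51.100.7"))  # False
```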

- Synchronize the database and file backups of production to minimize inconsistency between them.

[Perry] What do you mean by synchronizing the database/files backups? I thought that we copied the data from production to the NY server every night?

[Vlad] The database and file backups should be scheduled closer to each other and away from the Rackspace backup. Files should be backed up after the database. Since backup is a resource-consuming operation, it should start during a period of historically low server utilization. I need to gather some statistics for that.
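Once utilization statistics are collected, picking a start time for the backup can be as simple as averaging samples by hour of day. A minimal Python sketch, assuming the samples are (timestamp, percent utilization) pairs exported from whatever monitoring is available; the sample values are invented for illustration. Per the comment above, the database backup would start at the returned hour and the file backup would follow it.

```python
from collections import defaultdict
from datetime import datetime
from typing import Iterable, Tuple


def quietest_hour(samples: Iterable[Tuple[datetime, float]]) -> int:
    """Return the hour of day (0-23) with the lowest mean utilization."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for ts, utilization in samples:
        totals[ts.hour] += utilization
        counts[ts.hour] += 1
    if not counts:
        raise ValueError("no samples")
    # Compare means so that hours with more samples are not penalized.
    return min(counts, key=lambda h: totals[h] / counts[h])


samples = [
    (datetime(2012, 3, 1, 2), 35.0), (datetime(2012, 3, 2, 2), 25.0),
    (datetime(2012, 3, 1, 4), 10.0), (datetime(2012, 3, 2, 4), 14.0),
    (datetime(2012, 3, 1, 14), 80.0),
]
print(quietest_hour(samples))  # 4
```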

Steps

- Stop scheduled backups of production. On dev2 execute: "touch /.no-ct2-backup"

- Check that the latest db backup has been restored on dev2. Go to https://dev2.jaguarfreight.com/LastBackup.html. TBD

- Stop the dev and staging environments. Go to https://dev.jaguarfreight.com/manager/html and https://staging.jaguarfreight.com/manager/html, and stop the /Clinet and /internal apps there.

- Stop the xlive environment. Go to https://xlive.jaguarfreight.com/manager/html and stop /servlets/cybertrax.

- Pull the application files from the latest backup into the working directory. On dev2 execute: "mv /var/cybertrax2 /var/cybertrax2.save; XXX /var/cybertrax2". TBD

- Deploy the latest WAR files from backup to production. On dev2 execute: "cp /backup/ct2_webapps/2012-02-XXX/*.war /srv/tomcat6/ct"

- Redirect ct.jaguarfreight.com to 69.74.55.203. Go to https://my.rackspace.com. Account number 22130. Network->Domains->jaguarfreight.com

- TBD

[Perry] I'm assuming the above steps are all to be performed on DEV2?

[Vlad] Yes, with the exception of the part done on Rackspace.
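The Tomcat steps (stopping /Clinet and /internal on dev and staging, and /servlets/cybertrax on xlive) could also be scripted instead of clicked through /manager/html. The Python sketch below assumes these instances run Tomcat 6, whose plain-text manager commands take the form `/manager/stop?path=...` (Tomcat 7 and later moved them under `/manager/text/`), and that a Tomcat user permitted to use the manager exists. The credentials are placeholders, and the context paths are copied as written on this page.

```python
import base64
import urllib.parse
import urllib.request

# Assumption: Tomcat 6 text manager; on Tomcat 7+ this prefix is "/manager/text".
MANAGER_PREFIX = "/manager"

# Context paths copied from the recovery steps on this page.
APPS = {
    "https://dev.jaguarfreight.com": ["/Clinet", "/internal"],
    "https://staging.jaguarfreight.com": ["/Clinet", "/internal"],
    "https://xlive.jaguarfreight.com": ["/servlets/cybertrax"],
}


def manager_url(base: str, command: str, context_path: str) -> str:
    """Build a text-manager URL, e.g. <base>/manager/stop?path=/internal."""
    return f"{base}{MANAGER_PREFIX}/{command}?path={urllib.parse.quote(context_path)}"


def run_command(url: str, user: str, password: str) -> str:
    """Send one manager command with HTTP Basic auth; Tomcat replies 'OK - ...' or 'FAIL - ...'."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8", "replace").strip()


def apply_to_all(command: str, user: str, password: str) -> None:
    """Run 'stop' (recovery) or 'start' (normalization) on every listed app."""
    for base, paths in APPS.items():
        for path in paths:
            reply = run_command(manager_url(base, command, path), user, password)
            print(base + path, "->", reply)
```

During recovery this would be called as `apply_to_all("stop", user, password)`; the "Start dev, staging, xlive environments" normalization step is the same call with `"start"`.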

[Vlad to complete]
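The WAR deployment step currently requires typing the dated backup directory by hand (`2012-02-XXX`). A Python sketch of selecting the newest one automatically, assuming the directories under /backup/ct2_webapps are named with a leading YYYY-MM-DD date (inferred from the example path, not confirmed) and copying into /srv/tomcat6/ct as that step does:

```python
import re
import shutil
from pathlib import Path
from typing import List

# Assumption: backup directories start with an ISO date, e.g. "2012-02-28".
DATED_DIR = re.compile(r"^\d{4}-\d{2}-\d{2}")


def latest_backup_dir(root: Path) -> Path:
    """Return the subdirectory of root with the latest date prefix."""
    candidates = [p for p in root.iterdir() if p.is_dir() and DATED_DIR.match(p.name)]
    if not candidates:
        raise FileNotFoundError(f"no dated backup directories under {root}")
    # ISO dates sort chronologically when compared as plain strings.
    return max(candidates, key=lambda p: p.name)


def deploy_wars(backup_root: Path, target: Path) -> List[str]:
    """Copy every *.war from the newest backup into target; return the copied names."""
    source = latest_backup_dir(backup_root)
    copied = []
    for war in sorted(source.glob("*.war")):
        shutil.copy2(war, target / war.name)
        copied.append(war.name)
    return copied
```

On dev2 this would be called as `deploy_wars(Path("/backup/ct2_webapps"), Path("/srv/tomcat6/ct"))`.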

Estimated recovery time 10 minutes

Database

Web Server

Application Server

FTP server

SMTP server

Recovery steps......

Normalization

Steps

- Stop application

- Start dev, staging, xlive environments.

- Back up the database

- Compress the database backup

- Copy the compressed backup to production

- Back up the production database for later examination

- Drop the production database

- Restore the production database from the copied backup

- Save the existing TMS files in production for later examination

- Copy outbound TMS files to production

- Switch the DNS record for ct.jaguarfreight.com from dev back to production

- Copy inbound TMS files to production

- Re-enable production backups. On dev2 execute: "rm /.no-ct2-backup"

- TBD

[Perry] I'm assuming the above Normalization steps are to be executed on DEV2. But what about the steps necessary on CT2 to bring Production back online?

[Vlad] CT2 will be brought back by Rackspace, who will restore the latest backup they have. Data from the second site will then overwrite the data from that backup.
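The database portion of the normalization steps (back up and compress the DR database, copy it to production, keep the database Rackspace restored for examination, drop it, and restore from the DR copy) maps onto standard MySQL client tools. The Python sketch below only prints the command sequence so it can be reviewed before anything is run. The database name `ct2`, the SSH host alias `ct2-prod`, and the use of MySQL option files for credentials are all assumptions, not details from this page.

```python
import shlex
from typing import List


def normalization_db_commands(db: str, prod_host: str, stamp: str) -> List[str]:
    """Return, in order, the shell commands for the database part of normalization.

    Meant to run on dev2 after the application has been stopped.
    All names are placeholders.
    """
    dr_dump = f"/tmp/{db}-dr-{stamp}.sql.gz"
    prod_dump = f"/tmp/{db}-prod-before-normalization-{stamp}.sql.gz"
    dump = f"mysqldump --single-transaction --routines {shlex.quote(db)}"
    recreate = shlex.quote(f"DROP DATABASE {db}; CREATE DATABASE {db}")

    def on_prod(cmd: str) -> str:
        # Run a command on the production host over SSH.
        return f"ssh {shlex.quote(prod_host)} {shlex.quote(cmd)}"

    return [
        # Back up and compress the database that served traffic during the outage.
        f"{dump} | gzip > {dr_dump}",
        # Copy the compressed dump to production.
        f"scp {dr_dump} {prod_host}:{dr_dump}",
        # Keep whatever Rackspace restored, for later examination.
        on_prod(f"{dump} | gzip > {prod_dump}"),
        # Drop (and recreate) the production database.
        on_prod(f"mysql -e {recreate}"),
        # Restore production from the copied dump.
        on_prod(f"gunzip < {dr_dump} | mysql {shlex.quote(db)}"),
    ]


for command in normalization_db_commands("ct2", "ct2-prod", "20120316"):
    print(command)
```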

[Vlad to complete]

Estimated normalization time 2 hours

Database

Web Server

Application Server

FTP server

SMTP server

Normalization steps...

Proposed CT2 Disaster Recovery Environment

Objectives

- Recovery Time Objective (RTO) - 2 hours to recover in Disaster Recovery environment.

- Recovery Point Objective (RPO) - < 1 hour of potential data loss.

- Eliminate all Single Points of Failure - incorporate redundancies in all nodes.
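For context on the RPO target: the worst-case data loss of any periodic copy (such as a nightly transfer to NY) is one interval plus the time a backup run takes to finish and transfer, because a disaster just before a run completes falls back to the previous snapshot. A small Python check, assuming each snapshot reflects the data at the moment its run starts and using a hypothetical 1-hour run time:

```python
from datetime import timedelta

RPO_TARGET = timedelta(hours=1)  # "< 1 hour of potential data loss"


def worst_case_data_loss(interval: timedelta, run_time: timedelta) -> timedelta:
    """Worst-case loss for periodic snapshots: a disaster just before a run
    finishes falls back to the previous snapshot, taken interval + run_time earlier."""
    return interval + run_time


nightly = worst_case_data_loss(timedelta(hours=24), timedelta(hours=1))
print(nightly, nightly < RPO_TARGET)  # 1 day, 1:00:00 False
```

Meeting the objective with periodic copies alone would need interval plus run time under one hour, which is why the architectures below rely on replication.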

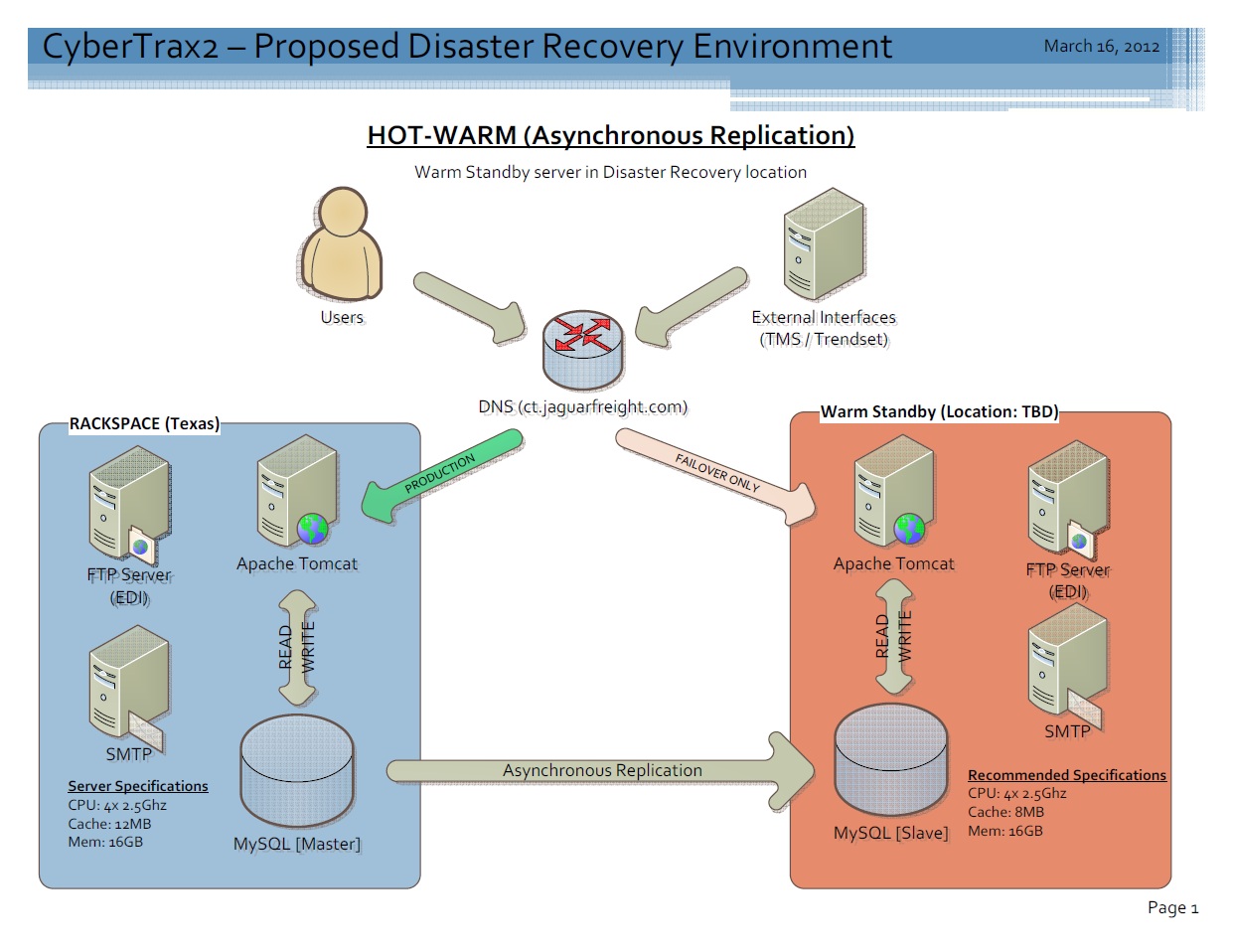

Proposed Architecture (HOT-WARM - Standby Server)

- Build a warm standby Disaster Recovery environment in Valley Stream, at Rackspace, or with another vendor. The standby server will remain IDLE during normal operations and will only become LIVE in the event of a failover.

- Configure the Production MySQL server to asynchronously replicate changes to the DR database server.

- Evaluate DNS options from Rackspace or other vendors for failing traffic over to the Disaster Recovery site in case of a Production outage.
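With asynchronous replication, the data at risk is whatever has not yet reached and been applied on the DR server, so the lag reported by the replica is a practical value to watch against the RPO. A sketch of such a check in Python, taking one row of MySQL's `SHOW SLAVE STATUS` as a mapping (for example from a dictionary cursor). The column names are those used by MySQL 5.x (newer releases rename them under `SHOW REPLICA STATUS`), and using the RPO itself as the alert threshold is an assumption; a lower threshold would give earlier warning.

```python
from typing import Mapping, Optional

RPO_SECONDS = 3600  # Recovery Point Objective: < 1 hour of potential data loss


def replication_problem(status: Mapping[str, object],
                        max_lag_s: int = RPO_SECONDS) -> Optional[str]:
    """Return a description of the problem, or None if the replica looks healthy.

    `status` is one row of SHOW SLAVE STATUS as a column-name -> value mapping.
    """
    for thread in ("Slave_IO_Running", "Slave_SQL_Running"):
        if status.get(thread) != "Yes":
            return f"{thread} is {status.get(thread)!r}"
    lag = status.get("Seconds_Behind_Master")
    if lag is None:
        # NULL means MySQL cannot compute the lag at the moment.
        return "Seconds_Behind_Master is NULL"
    if int(lag) >= max_lag_s:
        return f"replica is {lag} s behind, RPO is {max_lag_s} s"
    return None
```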

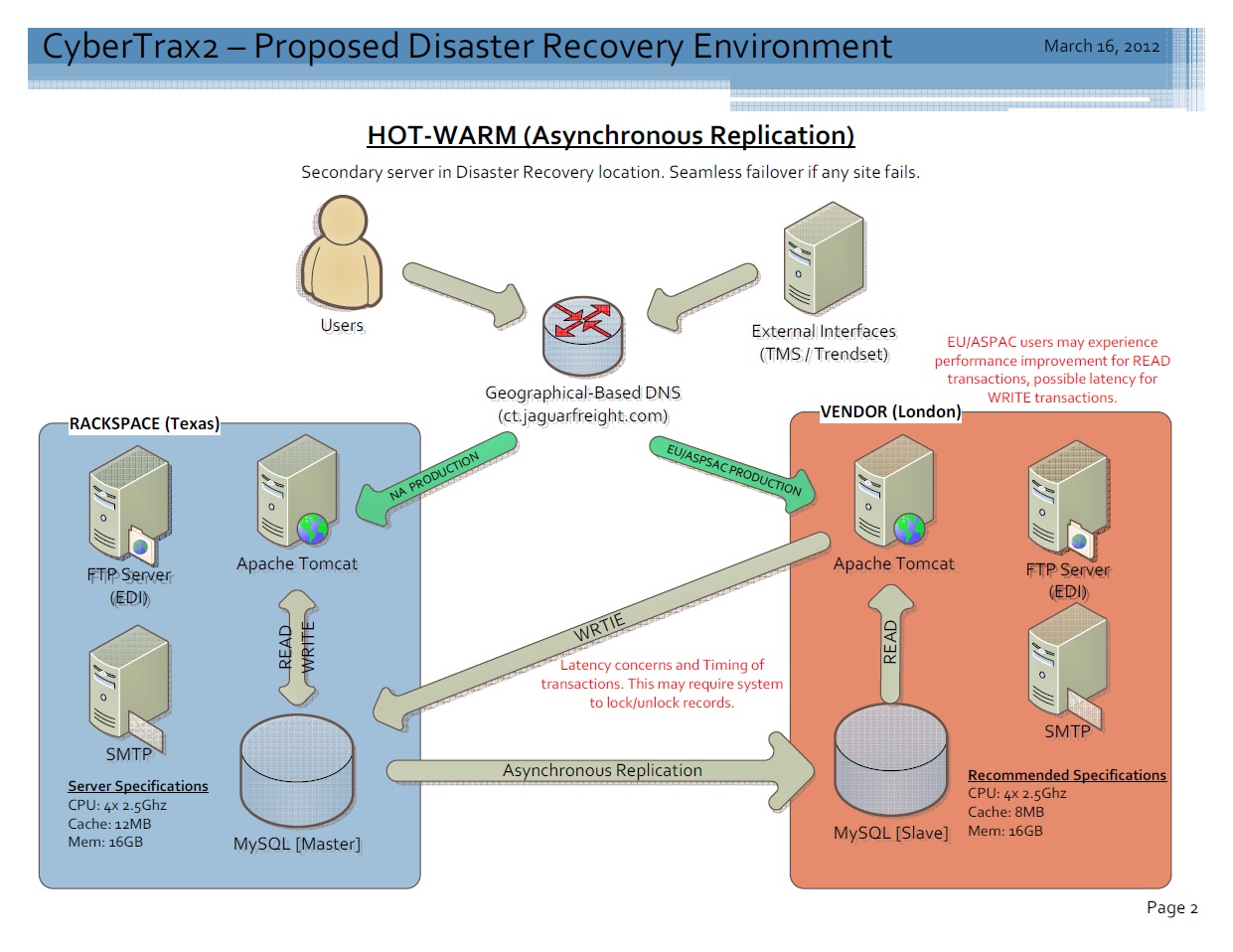

Proposed Architecture (HOT-WARM - Secondary Server)

- Build a secondary server for the Disaster Recovery environment, either at Rackspace (London) or with another vendor in Europe. Failover should be seamless.

- Configure the Production MySQL server to asynchronously replicate changes to a secondary SLAVE database server.

- Evaluate geography-based DNS options from Rackspace or other vendors to automatically fail traffic over to the secondary site in case of a Production outage at our Primary site.

- North America (NA) users will be routed to our Primary Site, while Europe/Asia-Pacific (EU/ASPAC) users will be routed to our Secondary Site in Europe. NA users will perform READ/WRITE transactions against the primary database. EU/ASPAC users will perform READ transactions against the secondary site and WRITE transactions against the primary site. EU/ASPAC users could see faster READ transactions but higher latency on WRITE transactions.

- Latency and timing concerns. Some development work will be required to account for the replication delay between an EU/ASPAC WRITE transaction (against the primary) and a subsequent EU/ASPAC READ transaction (against the secondary).
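One common way to handle the WRITE-then-READ gap is to send a user's reads to the primary for a short window after that user writes, and to the local replica otherwise. A Python sketch of that routing rule; the 5-second window, the SELECT-prefix heuristic, and the target names are assumptions, and in practice the window would need to exceed the observed replication lag.

```python
import time
from typing import Callable, Dict


class ReadWriteRouter:
    """Route statements for one site: writes to the primary, reads to the
    local replica, except shortly after the same user has written."""

    def __init__(self, stick_seconds: float = 5.0,
                 clock: Callable[[], float] = time.monotonic):
        self.stick_seconds = stick_seconds
        self.clock = clock
        self.last_write: Dict[str, float] = {}

    @staticmethod
    def is_read(sql: str) -> bool:
        # Heuristic: plain SELECTs are reads; SELECT ... FOR UPDATE and
        # everything else go to the primary.
        text = sql.lstrip().upper()
        return text.startswith("SELECT") and "FOR UPDATE" not in text

    def target(self, user: str, sql: str) -> str:
        now = self.clock()
        if not self.is_read(sql):
            self.last_write[user] = now
            return "primary"
        wrote_at = self.last_write.get(user)
        if wrote_at is not None and now - wrote_at < self.stick_seconds:
            # The replica may not have this user's write yet.
            return "primary"
        return "replica"
```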

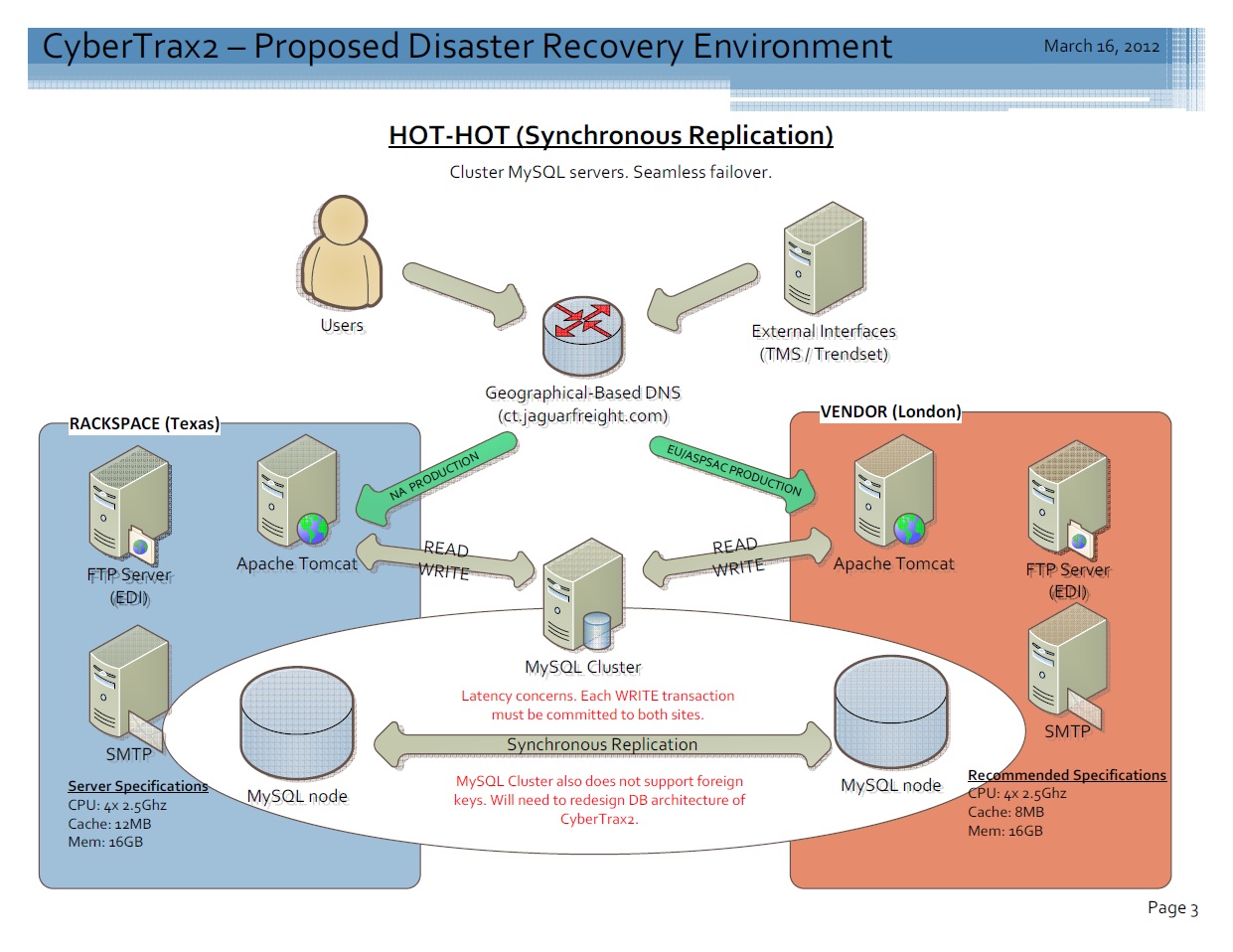

Proposed Architecture (HOT-HOT - Clustered Servers)

- Build a secondary server at Rackspace (London) or with another vendor in Europe. This is a high-availability design, and failover should be seamless.

- Configure both MySQL nodes to replicate changes synchronously between the two sites. One MySQL server will be designated as master and will manage the two-phase commit process between sites.

- Evaluate geography-based DNS options from Rackspace or other vendors to automatically fail traffic over to the other site in case of any outage.

- North America (NA) users will be routed to our North American site, while Europe/Asia-Pacific (EU/ASPAC) users will be routed to our European site.

- MySQL Cluster does not support foreign keys. This will require us to redesign the entire CyberTrax2 application.

- Latency and timing concerns. The two-phase commit process may add latency, since every WRITE transaction must commit at all sites before control returns to the master.
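A rough lower bound on that commit latency: assuming the master waits for every site in both phases, each phase costs at least one round trip to the slowest participant, so a commit costs about two round trips. A Python sketch with a hypothetical 75 ms round trip between the NA and EU sites:

```python
from typing import Iterable


def two_phase_commit_ms(participant_rtts_ms: Iterable[float], local_work_ms: float = 0.0) -> float:
    """Lower bound on commit time at the master when it waits for both phases."""
    rtts = list(participant_rtts_ms)
    slowest = max(rtts) if rtts else 0.0
    # PREPARE and COMMIT each need a reply from every participant; messages go
    # out in parallel, so each phase costs one round trip to the slowest site.
    return local_work_ms + 2 * slowest


per_commit = two_phase_commit_ms([75.0])  # hypothetical NA <-> EU round trip
print(per_commit)       # 150.0
print(10 * per_commit)  # 1500.0, e.g. ten sequential commits in one request
```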